|

Eunsu Kim

|

|

AffiliationsCarnegie Mellon University (CMU)Visiting Scholar in HCII Computer Science, Host professor: Sherry Wu 2025.09-present Korea Advanced Institute of Science and Technology (KAIST)M.S. in Computer Science, Advisor: Alice Oh 2023.09-present B.S. in Electrical Engineering 2019.03–2023.08 ● GPA: 4.02/4.3, Major GPA: 4.15/4.3 (Summa Cum Laude) |

Latest News!

🏆 Two papers will be presented in Neurips workshop! 🇺🇸 Starting as a visiting student at Carnegie Mellon University (CMU)! Sep 2025

🏆 Two papers accepted to EMNLP 2025 Findings!

🏆 Three papers accepted to ACL 2025 — two in Findings and one as Main (Oral)! 🏆 `When Tom Eats Kimchi' paper got outstanding paper awards in NAACL C3NLP! Congrats to my interns! Mar 2025 |

Selected Publications (See All)* denotes equal contributions |

|

Eunsu Kim*, Haneul Yoo*, Guijin Son, Hitesh Patel, Amit Agarwal, Alice Oh Preprint, Under Review paper |

|

Seyoung Song*, Seogyeong Jeong*, Eunsu Kim, Jiho Jin, Dongkwan Kim, Jay Shin, Alice Oh EMNLP 2025(Findings) paper |

|

Zahra Bayramli*, Ayhan Suleymanzade*, Na Min An, Huzama Ahmad, Eunsu Kim, Junyeong Park, James Thorne, Alice Oh ACL 2025 (Oral), NAACL 2025 c3NLP workshop arXiv TL;DR |

|

Eunsu Kim, Juyoung Suk, Seungone Kim, Niklas Muennighoff, Dongkwan Kim, Alice Oh ACL 2025 Findings arXiv codebase TL;DR |

|

Juhyun Oh*, Eunsu Kim*, Jiseon Kim, Wenda Xu, William Yang Wang, Alice Oh EMNLP 2025 (Findings) arXiv |

|

Junho Myung, Nayeon Lee, Yi Zhou, Jiho Jin, Rifki Afina Putri, Dimosthenis Antypas, Hsuvas Borkakoty,Eunsu Kim, Carla Perez-Almendros, Abinew Ali Ayele, Víctor Gutiérrez-Basulto, Yazmín Ibáñez-García, Hwaran Lee, Shamsuddeen Hassan Muhammad, Kiwoong Park, Anar Sabuhi Rzayev, Nina White, Seid Muhie Yimam, Mohammad Taher Pilehvar, Nedjma Ousidhoum, Jose Camacho-Collados, Alice Oh Neurips D&B, 2025 arXiv Dataset TL;DR |

|

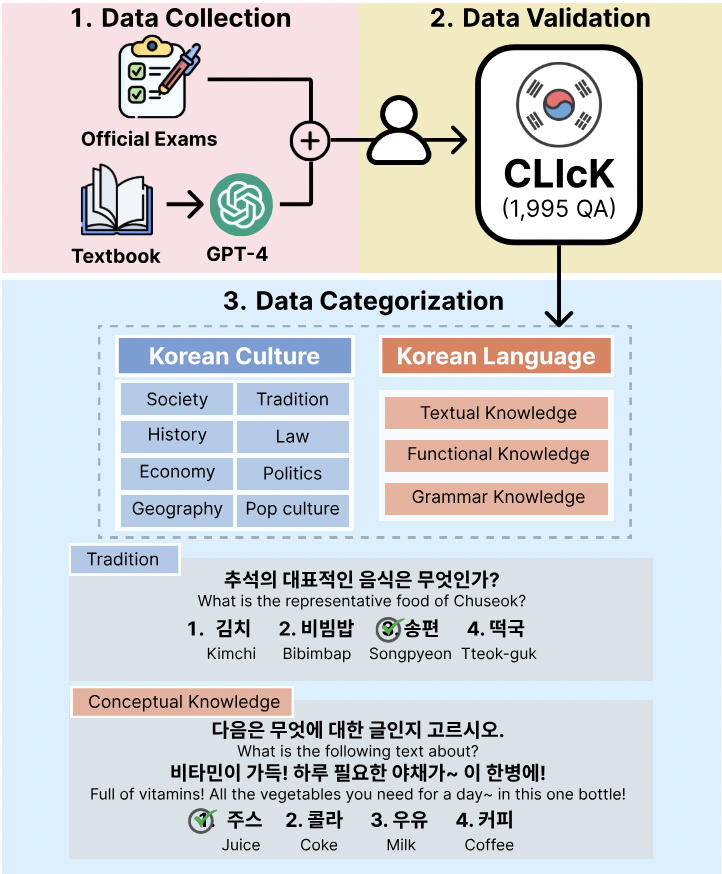

Eunsu Kim, Juyoung Suk, Philhoon Oh, Haneul Yoo, James Thorne, Alice Oh LREC-COLING 2024 arXiv Dataset TL;DR |

MiscBesides research, I love bread 🥯🥐🥨, table tennis 🏓, and learning new sports. I recently started tennis and yoga!

|